Generated API Gateway

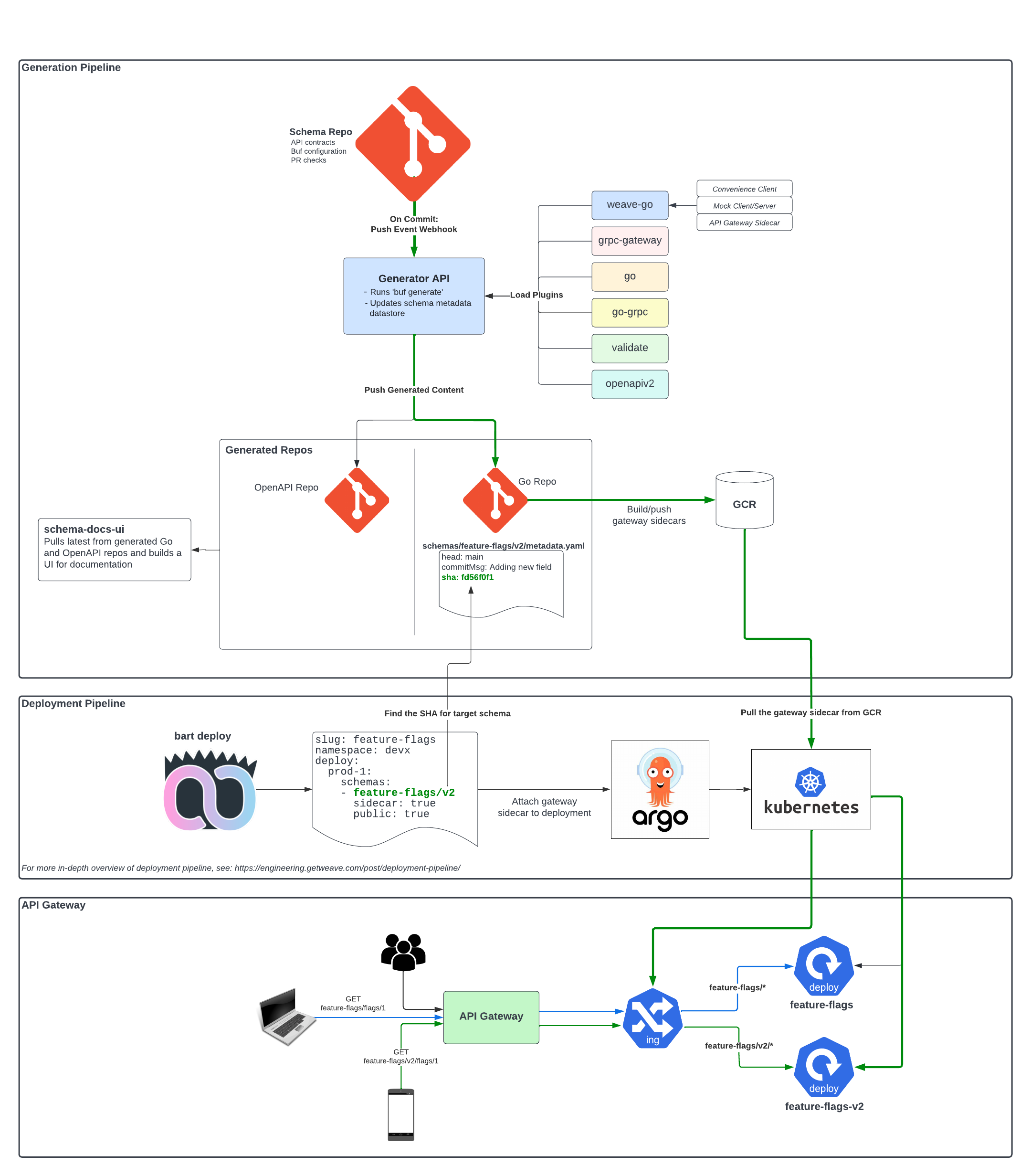

In part 1 of this post, I gave an in-depth walkthrough of how we built our auto-generated API gateway. Included in that post was a brief description of how our custom protoc plugin, protoc-gen-weave-go, generates the actual gateway code and middlewares for each API, or “schema”. This post extends that discussion with a deeper dive into how that generator works with our internal package, wgateway, to glue all the pieces of our API gateway together.

We refer to services that are exposed via our API gateway and defined in our centralized proto repository as “schemas”. For this post, I will continue to use that terminology.

Quick Review

For a more in-depth review of how our API gateway and gRPC generation system is set up, please see part 1 of this post.

Schema Routing

Our centralized “schema” repository contains all of our API specifications in protobuf form. A special directory, “weave/schemas”, serves as the root of our API gateway URL. For example, with the following directory structure, we would have APIs accessible at <api-gateway-domain>/feature-flags and <api-gateway-domain>/feature-flags/v2:

```
weave
└── schemas                    # ROOT OF API GATEWAY
    └── feature-flags          # v1
        ├── feature-flags.proto
        └── v2
            └── feature-flags.proto
```
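The directory-to-URL mapping can be sketched as a small Go function. This is an illustration, not our actual implementation: the routePrefix helper and the schemasRoot constant are hypothetical, and the sketch assumes schema paths are given relative to the repository root.

```go
package main

import (
	"fmt"
	"path"
	"strings"
)

// schemasRoot is a hypothetical constant for the directory that maps to the gateway's URL root.
const schemasRoot = "weave/schemas"

// routePrefix converts a schema directory into the URL prefix it is served under,
// e.g. "weave/schemas/feature-flags/v2" becomes "/feature-flags/v2".
func routePrefix(schemaDir string) (string, error) {
	clean := path.Clean(schemaDir)
	if !strings.HasPrefix(clean, schemasRoot+"/") {
		return "", fmt.Errorf("%q is not a schema directory under %q", schemaDir, schemasRoot)
	}
	// Trimming the root keeps the slash that follows it, producing an absolute URL path.
	return strings.TrimPrefix(clean, schemasRoot), nil
}

func main() {
	for _, dir := range []string{"weave/schemas/feature-flags", "weave/schemas/feature-flags/v2"} {
		prefix, err := routePrefix(dir)
		if err != nil {
			fmt.Println("error:", err)
			continue
		}
		fmt.Printf("%s -> %s\n", dir, prefix)
	}
}
```

Checking for schemasRoot + "/" rather than a bare string prefix keeps a sibling directory such as weave/schemas-old from being mistaken for a schema.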

Generated Content

For any given schema, all of the following files are autogenerated whenever commits are pushed to the schema repository:

```
schemas
└── feature-flags
    └── v2
        ├── gateway
        │   └── main.go                           # gateway sidecar
        ├── feature_flags.pb.client.go            # custom client initializer
        ├── feature_flags.pb.client.mock.go       # custom client mock
        ├── feature_flags.pb.client.mock_test.go  # custom client mock tests
        ├── feature_flags.pb.go
        ├── feature_flags.pb.gw.go
        ├── feature_flags.pb.server.go            # custom server initializer
        ├── feature_flags.pb.server.mock.go       # custom server mock
        ├── feature_flags.pb.validate.go
        ├── feature_flags_grpc.pb.go
        ├── gateway.pb.go                         # custom gateway initializer
        └── metadata.yaml                         # metadata with 'schema' repo commit information
```

Looking at the generated content above for the feature-flags/v2 API, we have the usual files generated from upstream plugins such as:

- protoc-gen-go -> feature_flags.pb.go
- protoc-gen-go-grpc -> feature_flags_grpc.pb.go
- protoc-gen-grpc-gateway -> feature_flags.pb.gw.go
- protoc-gen-validate -> feature_flags.pb.validate.go

There are also additional files generated by our custom protoc-gen-weave-go plugin:

- feature_flags.pb.client.go
- feature_flags.pb.client.mock.go
- feature_flags.pb.client.mock_test.go
- feature_flags.pb.server.go
- feature_flags.pb.server.mock.go
- gateway.pb.go

We use Go templates to generate the contents of all of these files. Most of the files with the feature_flags.pb* prefix are largely static, with very few inputs such as the Go package name and the proto “Service” name. For example, here is roughly what the Go template that generates the feature_flags.pb.client.go file looks like:

```
package {{ .Package }}

import (
	"context"

	"google.golang.org/grpc"
)

// ConvenienceClient wraps the {{ .Service.Name }}Client to allow method overloading
type ConvenienceClient struct {
	{{ .Service.Name }}Client
	conn *grpc.ClientConn
}

// NewClient initializes a new ConvenienceClient for the {{ .Service.Name }} schema
func NewClient(ctx context.Context) (*ConvenienceClient, error) {
	// initialize gRPC connection as 'conn'
	return &ConvenienceClient{New{{ .Service.Name }}Client(conn), conn}, nil
}

// Close closes the underlying grpc client connection
func (c *ConvenienceClient) Close() error {
	return c.conn.Close()
}

{{- $exampleMethod := (index .Service.Methods 0) }}

/*
This is an example of how you can overload the {{ $exampleMethod.Name }} method from
the generated {{ .Service.Name }}Client.

All overloading and any additional methods added to this 'ConvenienceClient' should be
added in other files within this package and should NOT contain the 'pb' prefix which
signifies auto-generated files that could be overwritten.

func (c *ConvenienceClient) {{ $exampleMethod.Name }}(ctx context.Context, {{ getMethodParamStr $exampleMethod.Input }}) (*{{ $exampleMethod.Output.Name }}, error) {
	return c.{{ .Service.Name }}Client.{{ $exampleMethod.Name }}(ctx, {{ getClientRequestStr $exampleMethod.Input }})
}
*/
```

This makes it very easy for any service within our infrastructure to talk to this service: it simply imports this auto-generated package and calls its NewClient function. The other files with the feature_flags.pb* prefix are similar; there is nothing especially groundbreaking happening. They are all just simple Go templates, rendered with a small dataset populated from the parsed protobuf file.

The fun really begins with the gateway.pb.go file. This is the file that ties all the generated Go code together with the routes and custom options provided in the proto file. The auto-generated code within this file is what makes everything work. So, let’s dive in!

Generated Gateway File

The pieces that make up this file are:

- Gateway Initialization
- Routing Configuration

Both of these pieces use our custom package, wgateway, to configure an app to run as a “Gateway” service. That simply means a service that is accessible through our API gateway and uses grpc-gateway.

wgateway is a wrapper we built on top of grpc-gateway’s runtime.ServeMux that lets us pass options at initialization to customize its behavior. A few of these custom options include:

- Port addresses
- Specific marshal functionality
- Middlewares
- runtime.ServeMux options
- gRPC interceptors & dial options
- Per-endpoint authentication & ACLs

Most of these are supported through custom options in our protobuf declarations. When we parse a proto file, we look for these specific options and use that data when generating the gateway.pb.go file. Specific examples of how this is accomplished during Gateway Initialization and Routing Configuration are outlined below.

Gateway Initialization

Initializing a wgateway.Gateway returns a type that is configured to run the grpc-gateway proxy for a service. It can run as a sidecar in a deployment or directly inside the main app’s container; either way, the initialization of a new wgateway.Gateway is the same.

The generated code to initialize a new wgateway.Gateway looks something like this:

feature-flags/gateway.pb.go

```go
// Code generated by protoc-gen-weave-go. DO NOT EDIT.

// Gateway is a personalized gateway for the FeatureFlags service
type Gateway struct {
	*wgateway.Gateway
}

// NewGateway returns an initialized Gateway with the provided options for the FeatureFlags schema
func NewGateway(ctx context.Context, opts ...wgateway.GatewayOption) (*Gateway, error) {
	gateway, err := wgateway.New(ctx, options(opts)...)
	if err != nil {
		return nil, err
	}

	registerRoutes(gateway)

	return &Gateway{gateway}, nil
}

func options(opts []wgateway.GatewayOption) []wgateway.GatewayOption {
	var gatewayOpts []wgateway.GatewayOption
	gatewayOpts = append(gatewayOpts, wgateway.WithAuthAPI())
	gatewayOpts = append(gatewayOpts, opts...)
	return gatewayOpts
}
```

As you can see, the generated schema-specific NewGateway function consists of two parts:

- initializing the gateway with the options provided

- registering all routes with the gateway

Custom Options

The options function is where all custom options that are not route-specific are placed. In our feature-flags example, the only option listed tells the wgateway package that it needs to use our auth-api service for authentication on at least one of the routes.

A more complicated options setup could look something like this:

```go
func options(opts []wgateway.GatewayOption) []wgateway.GatewayOption {
	var gatewayOpts []wgateway.GatewayOption
	gatewayOpts = append(gatewayOpts, wgateway.WithAuthAPI())
	gatewayOpts = append(gatewayOpts, wgateway.WithPartnersAuth())
	gatewayOpts = append(gatewayOpts, wgateway.WithOktaAuth())
	gatewayOpts = append(gatewayOpts, wgateway.WithOktaClientID("0oa4clcj2kQvrXbyJ4l8"))
	gatewayOpts = append(gatewayOpts, wgateway.WithEmitUnpopulatedMarshalOption())
	gatewayOpts = append(gatewayOpts, opts...)
	return gatewayOpts
}
```

This tells our wgateway package that when it initializes a new Gateway, it needs:

- an auth-api client set up for at least one route
- a partners-auth client set up for at least one route
- an okta auth client set up for at least one route, with a specific Okta client ID
- the runtime marshaller to emit unpopulated fields
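These options follow Go's functional options pattern. The stdlib-only sketch below is a simplified, hypothetical model (the Gateway fields, newGateway, and this WithOktaClientID are illustrative, not wgateway's real code) that shows one consequence of how the generated options function is written: options are applied in order, and caller-supplied options are appended last, so anything a service owner passes to NewGateway overrides what the generator set.

```go
package main

import "fmt"

// Gateway is a hypothetical, trimmed-down stand-in for wgateway.Gateway.
type Gateway struct {
	usesAuthAPI  bool
	usesOktaAuth bool
	oktaClientID string
}

// GatewayOption mutates a Gateway during construction.
type GatewayOption func(*Gateway)

func WithAuthAPI() GatewayOption  { return func(g *Gateway) { g.usesAuthAPI = true } }
func WithOktaAuth() GatewayOption { return func(g *Gateway) { g.usesOktaAuth = true } }

// WithOktaClientID records which Okta client ID to validate against (illustrative).
func WithOktaClientID(id string) GatewayOption {
	return func(g *Gateway) { g.oktaClientID = id }
}

// generatedOptions mirrors the generated options function: schema options first,
// caller options last so they take precedence.
func generatedOptions(opts []GatewayOption) []GatewayOption {
	gatewayOpts := []GatewayOption{WithAuthAPI(), WithOktaAuth(), WithOktaClientID("generated-id")}
	return append(gatewayOpts, opts...)
}

// newGateway applies options in order, like the loop in wgateway.New.
func newGateway(opts ...GatewayOption) *Gateway {
	g := &Gateway{}
	for _, opt := range opts {
		opt(g)
	}
	return g
}

func main() {
	g := newGateway(generatedOptions([]GatewayOption{WithOktaClientID("override-id")})...)
	fmt.Println(g.usesAuthAPI, g.usesOktaAuth, g.oktaClientID) // true true override-id
}
```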

Routing Configuration

The routing configuration of our generated gateway file ties together all the routes from the proto file with any custom options provided for each route. It also adds CORS preflight check routes for all unique paths. The custom options supported per endpoint are:

- type of authentication

- specific ACLs

- predefined middlewares

Here are some examples of how an RPC can be configured in the proto file and what that translates to in the route configuration section of the generated gateway.pb.go file.

Default

feature_flags.proto

```protobuf
rpc CreateFeatureFlag(CreateFeatureFlagRequest) returns (Flag) {
  option (google.api.http) = {
    post: "/v1/flags"
    body: "flag"
  };
}
```

feature-flags/gateway.pb.go

```go
func registerRoutes(gateway *wgateway.Gateway) {
	gateway.RegisterRoute(http.MethodPost, "/feature-flags/v1/flags")
	gateway.RegisterCorsPreflightCheckRoute("/feature-flags/v1/flags")
	//...
}
```

Auth Disabled

feature_flags.proto

```protobuf
rpc CreateFeatureFlag(CreateFeatureFlagRequest) returns (Flag) {
  option (wv.schema.auth_method) = "disabled"; // custom option
  option (google.api.http) = {
    post: "/v1/flags"
    body: "flag"
  };
}
```

feature-flags/gateway.pb.go

```go
func registerRoutes(gateway *wgateway.Gateway) {
	gateway.RegisterUnauthenticatedRoute(http.MethodPost, "/feature-flags/v1/flags")
	gateway.RegisterCorsPreflightCheckRoute("/feature-flags/v1/flags")
	//...
}
```

Okta Auth

feature_flags.proto

```protobuf
rpc CreateFeatureFlag(CreateFeatureFlagRequest) returns (Flag) {
  option (wv.schema.auth_method) = "okta"; // custom option
  option (google.api.http) = {
    post: "/v1/flags"
    body: "flag"
  };
}
```

feature-flags/gateway.pb.go

```go
func registerRoutes(gateway *wgateway.Gateway) {
	gateway.RegisterOktaAuthRoute(http.MethodPost, "/feature-flags/v1/flags")
	gateway.RegisterCorsPreflightCheckRoute("/feature-flags/v1/flags")
	//...
}
```

Custom ACL

feature_flags.proto

```protobuf
rpc CreateFeatureFlag(CreateFeatureFlagRequest) returns (Flag) {
  option (wv.schema.permission) = FEATURE_FLAG_CREATE; // custom option
  option (google.api.http) = {
    post: "/v1/flags"
    body: "flag"
  };
}
```

feature-flags/gateway.pb.go

```go
func registerRoutes(gateway *wgateway.Gateway) {
	gateway.RegisterRoute(http.MethodPost, "/feature-flags/v1/flags", wgateway.WithACLPermission(waccesspb.Permission_FEATURE_FLAG_CREATE))
	gateway.RegisterCorsPreflightCheckRoute("/feature-flags/v1/flags")
	//...
}
```

Note that all routes are prefixed with /feature-flags because that is the location of that schema in our centralized schema repository. As a reminder, this is the directory structure for the feature-flags schemas:

```
weave
└── schemas                    # ROOT OF API GATEWAY
    └── feature-flags          # v1
        ├── feature-flags.proto
        └── v2
            └── feature-flags.proto
```

So, if we were looking at the generated route configuration for the schema located in the weave/schemas/feature-flags/v2 directory, all the routes would be prefixed with /feature-flags/v2.

Run Functionality

Once the wgateway.Gateway has been initialized, it needs to be run. As stated before, it can run as a sidecar in the deployment or directly in the code of the main app’s container.

The Run method for the generated schema’s Gateway looks like this:

```go
// Run executes the gateway server for the FeatureFlags schema
func (g *Gateway) Run(ctx context.Context) error {
	// initialize gRPC client connection to talk to the gRPC server that implements the service
	conn, err := wgrpcclient.NewGatewayClient(ctx, g.TargetAddr(), g.UnaryInterceptors(), g.StreamInterceptors(), g.DialOpts()...)
	if err != nil {
		return werror.Wrap(err, "failed to establish grpc connection").Add("targetAddr", g.TargetAddr())
	}

	// register the http handlers contained in the initialized Gateway's runtime Mux and
	// provide the initialized gRPC client connection to use when forwarding requests
	err = RegisterFeatureFlagsHandler(ctx, g.RuntimeMux(), conn)
	if err != nil {
		return werror.Wrap(err, "failed to register grpc gateway handler")
	}

	// start the server
	return g.ListenAndServe()
}
```

This is the simplest part of the entire generated gateway.pb.go file. All it does is:

- initialize a gRPC client connection

- register the HTTP handlers with the gRPC client connection

- start the server

Note that all the data needed for this is already contained in the initialized wgateway.Gateway, thanks to the default options combined with any custom options provided. So, we simply take that data and configuration from the Gateway and use it to create the gRPC client connection and to register that connection with the HTTP handlers.

Quick Review

As stated above and in the Generated Gateway section of the previous post, a Gateway can run as a sidecar in the deployment or directly in the code of the main app’s container. In both cases, the code is exactly the same: call the generated NewGateway function for a particular schema, and then call its Run method:

```go
gateway, err := featureflagspb.NewGateway(ctx)
if err != nil {
	// handle error
}

err = gateway.Run(ctx)
if err != nil {
	// handle error
}
```

This allows service owners to run the Gateway directly within their service code. Although most services do not, there are still some use cases for doing so, such as:

- Custom authentication

- Adding additional handlers to serve files (because serving files in gRPC is 💩)

- Custom runtime marshal functionality not yet supported by our custom options

The majority of services simply run the Gateway as a sidecar in their deployment and never have to worry about initializing and running it. When the gateway.pb.go file is generated, a gateway/main.go file is also generated relative to its directory. This is the code built into the Docker container that runs the Gateway as a sidecar. The file is roughly 25 lines long and contains, more or less, exactly the same code that is required when running manually:

```
schemas
└── feature-flags
    └── gateway
        └── main.go  # gateway sidecar
```

```go
// Code generated by protoc-gen-weave-go. DO NOT EDIT.
package main

import (
	...
	pb "weavelab.xyz/schema-gen-go/schemas/feature-flags"
)

func main() {
	...
	gateway, err := pb.NewGateway(ctx)
	if err != nil {
		// handle error
	}

	err = gateway.Run(ctx)
	if err != nil {
		// handle error
	}
}
```

In our case, the schema-gen-go repository is where all the Go code generated from our centralized schema repository lives.

Running as a Sidecar

When running the Gateway as a sidecar, the service owner simply needs to specify sidecar: true when defining their schema in their WAML™. The WAML™, or .weave.yaml, lives at the root of every service’s repository. For each service, it defines:

- The name of the service

- The team that owns it

- How to deploy it

- How to alert for it

- etc…

For this post, the important aspect of the WAML™ is the “schema” portion. This section specifies which schema the service is implementing, whether to attach it as a sidecar, and whether to make it public. A typical “schema” section would look something like:

```yaml
schemas:
  - path: feature-flags
    sidecar: true
    public: true
```

This is illustrated in the “Deployment Pipeline” section of the diagram below (the full explanation of this diagram can be found in the previous post):

So, to summarize where we are: when a commit is pushed to our “schema” repository, our generation API generates all the code needed to initialize and run a Gateway service. Whether the Gateway runs directly in the service code or as a sidecar in the deployment, the code is the same. Now, let’s look at how wgateway glues our generated Gateway code to grpc-gateway’s runtime.ServeMux.

wgateway

wgateway wraps grpc-gateway’s runtime.ServeMux to provide some sugar around how it is initialized. The wgateway.New function looks something like this:

```go
// New initializes a new Gateway with the provided options
func New(ctx context.Context, opts ...GatewayOption) (*Gateway, error) {
	// set the HTTP listen port
	listenPort := defaultListenPort
	if port := os.Getenv(ListenPortEnvKey); port != "" {
		listenPort = port
	}

	// set the gRPC proxy port
	proxyPort := defaultProxyPort
	if port := os.Getenv(ProxyPortEnvKey); port != "" {
		proxyPort = port
	}

	g := &Gateway{
		listenPort:        listenPort,
		proxyPort:         proxyPort,
		unaryInterceptors: defaultUnaryInterceptors,
		dialOpts:          defaultDialOpts,
		serveMuxOpts:      defaultServeMuxOpts,
	}

	// apply custom options
	for _, opt := range opts {
		opt(g)
	}

	// this needs to happen AFTER the options are applied so that the WAML™ can override any default
	// marshal options that are defined in the proto file
	g.applyMarshalOptionOverrides(ctx)

	// setup gRPC client to auth-api service if needed for authentication
	if g.usesAuthAPI {
		//...
	}

	// setup gRPC client to partners-auth service if needed for authentication
	if g.usesPartnersAuth {
		//...
	}

	// add Okta auth middleware if needed
	if g.usesOktaAuth {
		//...
	}

	// initialize runtime mux and add handlers
	g.runtimeMux = runtime.NewServeMux(g.serveMuxOpts...)
	for _, h := range g.handlers {
		err := g.runtimeMux.HandlePath(h.method, h.pattern, h.handlerFunc)
		if err != nil {
			return nil, werror.Wrap(err, "HandlePath error").Add("pattern", h.pattern)
		}
	}

	return g, nil
}
```

Most of this function is self-explanatory and easy to follow; each part is explained below:

- Set the HTTP listen port and the gRPC proxy port
- Initialize a Gateway with the default options
- Apply any custom options
- Initialize the gRPC clients it needs for authentication
- Set up Okta auth middleware
- Initialize the runtime.ServeMux and register the handlers

Set the HTTP listen port and the gRPC proxy port

The default ports are used unless they are overridden in the “schema” section of the WAML™:

```yaml
schemas:
  - path: feature-flags
    sidecar: true
    public: true
    options:
      listen-port: 8080
      proxy-port: 9090
```

Using these port override options sets a specific environment variable in the deployment, which is read when wgateway.New is called.

Initialize a Gateway with the default options

This allows us to add default functionality to every single Gateway, such as:

- a gRPC interceptor that adds user agent metrics for incoming requests
- a runtime.ServeMuxOption for the default error handler
- a runtime.ServeMuxOption that extracts known HTTP headers and embeds them into the context metadata
- etc.
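As one hedged example of such a default, grpc-gateway v2 lets you decide which incoming HTTP headers are forwarded as gRPC metadata by passing a function of type func(string) (string, bool) to runtime.WithIncomingHeaderMatcher. The stdlib-only matcher below shows the idea; the headerMatcher name and the header names in knownHeaders are assumptions, not our actual list.

```go
package main

import (
	"fmt"
	"net/textproto"
	"strings"
)

// knownHeaders is a hypothetical allow list of headers to forward as gRPC metadata.
var knownHeaders = map[string]bool{
	"X-Request-Id":     true,
	"X-Correlation-Id": true,
}

// headerMatcher has the shape of grpc-gateway's runtime.HeaderMatcherFunc: it
// returns the metadata key to use and whether the header should be forwarded.
func headerMatcher(key string) (string, bool) {
	canonical := textproto.CanonicalMIMEHeaderKey(key)
	if knownHeaders[canonical] {
		// gRPC metadata keys are lower case.
		return strings.ToLower(canonical), true
	}
	return "", false
}

func main() {
	for _, h := range []string{"x-request-id", "Cookie"} {
		key, forwarded := headerMatcher(h)
		fmt.Printf("%s -> %q (forwarded: %v)\n", h, key, forwarded)
	}
}
```

Wiring it in would look like runtime.NewServeMux(runtime.WithIncomingHeaderMatcher(headerMatcher)). In practice you would probably fall back to runtime.DefaultHeaderMatcher for headers outside the list, so grpc-gateway's default forwarding rules still apply.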

Apply any custom options

This is where we apply any custom options that come from the gateway.pb.go file. As a reminder, the options portion of that file looks something like this:

```go
// Code generated by protoc-gen-weave-go. DO NOT EDIT.

// Gateway is a personalized gateway for the FeatureFlags service
type Gateway struct {
	*wgateway.Gateway
}

// NewGateway returns an initialized Gateway with the provided options for the FeatureFlags schema
func NewGateway(ctx context.Context, opts ...wgateway.GatewayOption) (*Gateway, error) {
	gateway, err := wgateway.New(ctx, options(opts)...)
	if err != nil {
		return nil, err
	}

	//...

	return &Gateway{gateway}, nil
}

func options(opts []wgateway.GatewayOption) []wgateway.GatewayOption {
	var gatewayOpts []wgateway.GatewayOption
	gatewayOpts = append(gatewayOpts, wgateway.WithAuthAPI())
	gatewayOpts = append(gatewayOpts, opts...)
	return gatewayOpts
}

//...
```

In this example, you can see that we provide the custom option wgateway.WithAuthAPI. That function simply tells the Gateway to initialize an auth-api gRPC client:

```go
func WithAuthAPI() GatewayOption {
	return func(g *Gateway) {
		g.usesAuthAPI = true
	}
}
```

All of our other custom options work in a similar way.

Initialize any gRPC clients needed for authentication

As we just saw, when a custom option for specific authentication is provided, it simply sets a flag on the Gateway telling it which auth clients it needs to initialize. Providing the wgateway.WithAuthAPI custom option sets the Gateway’s usesAuthAPI field to true, so when the Gateway evaluates g.usesAuthAPI, it knows it needs to initialize a gRPC client connection to the auth-api service for authentication on at least one route.

Set up Okta auth middleware

Setting up the Okta middleware works exactly like the other custom options. If the wgateway.WithOktaAuth custom option is provided, the Gateway’s usesOktaAuth field is set to true, and the middleware is attached when that code block is reached.

```go
func WithOktaAuth() GatewayOption {
	return func(g *Gateway) {
		g.usesOktaAuth = true
	}
}
```

Initialize the runtime.ServeMux and register the handlers

This section requires the most explanation. This is where we add the routes that were registered in the gateway.pb.go file. As a reminder, the routing portion of that file could look something like this (illustrating all possible route types):

```go
// Code generated by protoc-gen-weave-go. DO NOT EDIT.

// Gateway is a personalized gateway for the FeatureFlags service
type Gateway struct {
	*wgateway.Gateway
}

// NewGateway returns an initialized Gateway with the provided options for the FeatureFlags schema
func NewGateway(ctx context.Context, opts ...wgateway.GatewayOption) (*Gateway, error) {
	gateway, err := wgateway.New(ctx, options(opts)...)
	if err != nil {
		return nil, err
	}

	registerRoutes(gateway)

	return &Gateway{gateway}, nil
}

func registerRoutes(gateway *wgateway.Gateway) {
	gateway.RegisterRoute(http.MethodPost, "/feature-flags/v1/flags", wgateway.WithACLPermission(waccesspb.Permission_FEATURE_FLAG_CREATE))
	gateway.RegisterUnauthenticatedRoute(http.MethodPost, "/feature-flags/v1/unauthed")
	gateway.RegisterOktaAuthRoute(http.MethodPost, "/feature-flags/v1/okta")
	gateway.RegisterPartnersAuthRoute(http.MethodPost, "/feature-flags/v1/partners")
	//...
}

//...
```

As you can see, there is a Register method for each type of route. These registration methods simply add the route to the route map on the Gateway. This allows us to evaluate each incoming request and apply the particular auth, ACL, or middleware that its route calls for before passing it along the middleware chain.

Conclusion

We have spent A LOT of time developing what we think is a world-class schema-first generation pipeline. Our engineers don’t have to worry about all the little details. They simply write their proto files and define their API contracts. The rest is up to the DevX, or Developer Experience, team. We ensure that the correct code is generated and that a Docker container is built for every single state of their proto file on any branch. For more information about what our team does, see the Platform Engineering at Weave post.

I hope this post has been informative. I know it was a little (very) dense with code examples. However, I have grown tired of reading extremely high-level blog posts about cool solutions to challenging technical problems that leave you asking, “OK, so how can I do something like this at my company?” So, I decided to explain exactly how we built this system.

Here at Weave we are always looking for passionate and creative engineers to join us. Take a look at our Careers page and see if anything piques your interest. You never know, maybe soon you’ll be using this system yourself as a new Weave engineer!